Is AI a Solution Looking for a Problem?

Are ChatGPT, Claude, Grok, and others actually getting results?

The Suno AI Co-Founder made me question everything about AI.

Mikey Shulman co-founded Suno, an AI music company.

He said: “I think the majority of people don’t enjoy the majority of the time they spend making music.”

I stared at my laptop for a second. "He thinks people learn guitar to save time?"

I see kids spending three hours trying to play one Beatles song badly. They're grinning the entire time.

"What's the point of using AI here?" I thought. "There's no need for it. It's a nice-to-have to make music quickly. But not a necessity."

But the problem is more than writing music. It’s AI companies desperately grasping for problems to solve. Music, writing, coding, education, health.

They're swinging their AI hammer at everything, looking for nails.

I looked into their actual business models, starting with OpenAI, and found a $30B chasm that isn’t discussed often.

AI companies are losing money year after year.

If OpenAI stuck with ChatGPT alone, it would still lose money, and not for lack of subscriptions.

The money sink is the data center infrastructure.

Let’s do some napkin math:

Their ChatGPT subscription is $20/month & $200/month (Pro).

500 million weekly users (free & paid). 3 million business clients as of mid-June 2025.

If just 1% of users pay $20/month → 5M users × $20/month = $1.2B/year

If all 3M businesses pay $200/month → 3M × $200/month = $7.2B/year

That’s $8.4 billion/year using conservative estimates. But OpenAI projects $12.7 billion in revenue for 2025, which means they’re pulling in revenue from areas outside subscriptions, such as licenses, custom deployments, or contracts.

$8.4B/yr theoretical vs $12.7B reality.
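Here is the same napkin math as a small Python sketch, in case you want to plug in your own guesses. The user counts, prices, and the $12.7B projection come from the figures above. The 1% paid share and the "every business pays $200/month" case are my rough assumptions, not reported numbers, and the variable names are just for illustration.

```python
# Napkin math for subscription revenue, in whole dollars.
# ASSUMPTIONS (not reported figures): 1% of weekly users pay,
# and every business client pays the $200/month price.
WEEKLY_USERS = 500_000_000
PAID_SHARE_PERCENT = 1
CONSUMER_PRICE_PER_MONTH = 20
BUSINESS_CLIENTS = 3_000_000
BUSINESS_PRICE_PER_MONTH = 200
PROJECTED_2025_REVENUE = 12_700_000_000


def annual_revenue(subscribers: int, price_per_month: int) -> int:
    """Yearly revenue from a flat monthly price."""
    return subscribers * price_per_month * 12


paying_users = WEEKLY_USERS * PAID_SHARE_PERCENT // 100
consumer = annual_revenue(paying_users, CONSUMER_PRICE_PER_MONTH)
business = annual_revenue(BUSINESS_CLIENTS, BUSINESS_PRICE_PER_MONTH)
subscription_total = consumer + business

# Revenue that has to come from somewhere other than subscriptions.
gap = PROJECTED_2025_REVENUE - subscription_total

print(f"Consumer subscriptions: ${consumer / 1e9:.1f}B/year")
print(f"Business subscriptions: ${business / 1e9:.1f}B/year")
print(f"Subscription total:     ${subscription_total / 1e9:.1f}B/year")
print(f"Gap to projection:      ${gap / 1e9:.1f}B/year")
```

Change `PAID_SHARE_PERCENT` or the business price and the gap moves quickly, which is the point: the subscription side is a guess, and the projection still sits above it.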

OpenAI depends on non-consumer revenue more than the subscription numbers suggest.

Their data centers cost $30B annually

OpenAI is paying Oracle $30B annually to build a data center that would consume roughly the power output of 2 Hoover Dams.

And that’s just 1 data center project.

They don’t publicly disclose all data center projects, but they are expensive due to GPUs, cooling, and 24/7 operation.

With a projected revenue of $12.7B and costs of $30B, OpenAI is in the red.

If they wanted to double down on the AI chatbot market, they'd have to increase subscription prices, which they plan on doing anyway.

By the end of 2025, OpenAI plans to increase the price of its $20/month plan to $22/month and expects to hit $44/month by 2029.

But since they can't hike prices too quickly, another way to get funding is to dangle disruption and innovative tech at the mouths of Silicon Valley VCs to snag a couple billion in funding.

It's a tactic Elizabeth Holmes weaponized for Theranos and it works beautifully.

Just check out the recent headlines of how much money is being dumped following the hype.

From these headlines and napkin math, it seems the goal is to integrate AI into every facet of life to justify the costs of the data centers.

So, what does this mean for the future of AI?

Either they find a solid problem to solve that returns enough to justify their costs, or they'll learn what a free market means for once.

Is AI a Solution looking for a Problem?

The panic around AI isn't really about AI broadly.

We've had AI for decades in self-driving cars, video games, and navigation. Nobody freaked out about Google Maps or chess computers.

The panic started when LLMs stepped into the creativity space.

Previous AI handled tasks we gladly outsource: number crunching, route optimization, pattern recognition. But LLMs "replace" what we thought was uniquely human.

Now the hammer not only hammers nails but also writes poetry and crunches numbers.

The hammer talks back. “There is no nail.”

Companies like OpenAI, Meta, Google, Microsoft, and Anthropic have run with LLMs, creating chatbots to augment our creativity while also branching out into as many industries as possible from education to management to medicine to human resources.

What problems do we actually need LLMs to solve?

Speaking purely from a business sense, a business is a service that solves a problem. If it's not solving a problem, it will go out of business.

And that's what these companies are realizing.

The LLM chatbots aren't profitable enough, so they're branching out, even into areas that don't require AI.

They're going against their own business advice.

Even Peter Thiel says in his book “Zero to One” to dominate one specific industry, to monopolize, not target everything all at once.

But Sam Altman, co-founder and CEO of OpenAI, says they can cure cancer, improve society, and reach the stars.

Which is against everything any novice entrepreneur learns in their first year.

So why are these companies investing in medicine, AI glasses, and personalized AI tools instead of fixing the problems in their current chatbots, such as hallucinations, bias, and inaccuracies?

For example, Microsoft published a study on AI being better than doctors. But if you read the study, the headline is not accurate.

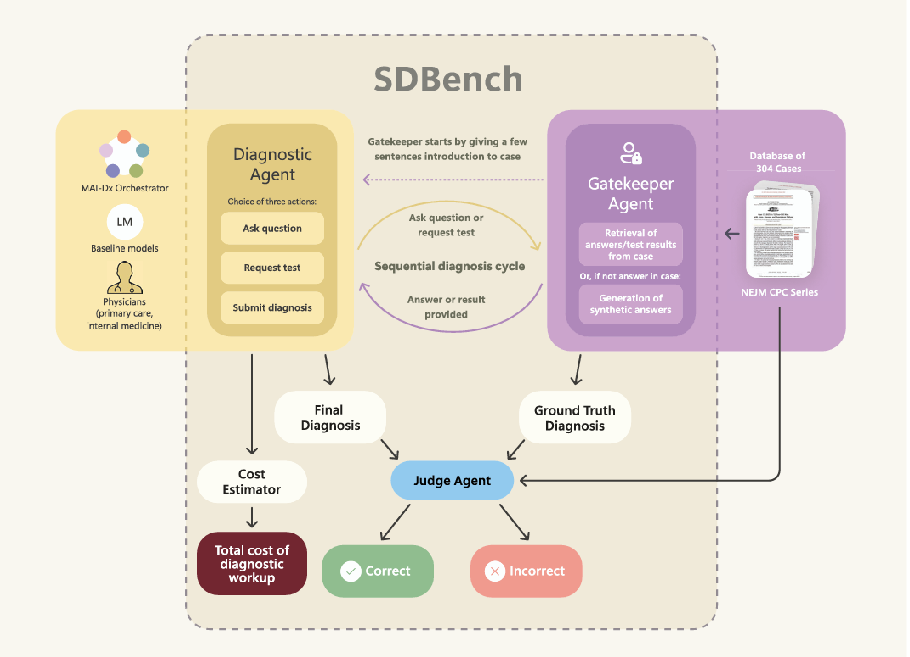

Microsoft tested a cohort of U.S. and U.K. physicians against a diagnostic multi-AI agent called MAI-DxO to see which could diagnose patients more accurately. MAI-DxO is an ensemble of LLMs such as GPT-4, Claude, Grok, Gemini, etc. working together to simulate a panel of physicians.

They took 304 challenging medical cases from the New England Journal of Medicine and had both the doctors and multi-AI agent systems diagnose and treat the patients.

Their evaluation process, the Sequential Diagnosis Benchmark (SDBench), is below.

MAI-DxO (the diagnostic AI) asks questions of the Gatekeeper AI. Then a Judge AI, another LLM, scores its final diagnosis on accuracy, relevance, and reasoning.

MAI-DxO had 5 other AIs to bounce ideas off of while the Gatekeeper model, which held all the clinical info, revealed it sequentially. The physicians had nothing comparable. MAI-DxO could ask 5 questions per turn while doctors were limited to 1. Doctors worked alone with no online resources. That is the opposite of real medical practice.

The more I read the paper, the less it looks like a study comparing the diagnostic skills of AI and physicians and the more it looks like a game tailored to the AI’s strengths.

It’s similar to testing a self-driving car's safety on sunny days with no one on the road. The performance makes for a great headline, but it isn't good enough in practice.

Just more hype to pull funding towards them.

There's a 3rd option to survive: deregulating everything.

They need access to all data, and the US has specific laws protecting consumer data.

Right now Anthropic and OpenAI are facing lawsuits over copyright infringement and piracy.

Anthropic's case is a $1.5B piracy lawsuit that could end the company if they lose. OpenAI has multiple mini lawsuits.

OpenAI alone spent $1.8 million on lobbying in the first half of 2025, a 30% increase year-over-year. Meta dropped $13.8 million. Combined, the big AI companies spent $36 million targeting specific deregulation efforts: a federal 10-year moratorium on state AI regulation, expanded copyright fair use for AI training data, and rolling back privacy laws that limit data scraping.

And this was before the White House's "AI Action Plan," which directs agencies to eliminate rules "impeding AI." They’re desperate to change those laws because it would make everything easier. No copyright. No piracy. No privacy issues.

Consistent with Executive Order 14192 of January 31, 2025, “Unleashing Prosperity Through Deregulation,” the plan calls for working with all Federal agencies to identify, revise, or repeal regulations, rules, memoranda, administrative orders, guidance documents, policy statements, and interagency agreements that unnecessarily hinder AI development or deployment.

But let’s say they lose those court cases.

What will happen to the companies built on top of ChatGPT, Claude, Copilot, or Gemini if OpenAI, Anthropic, Microsoft AI, or Google's AI team goes under?

Look at the dot com companies in the early 2000s.

All these factors make you wonder whether AI is a solution looking for a problem, and whether it needs to be everywhere.

Turns out the most revolutionary thing about AI might be knowing when not to use it.